Deep Analysis and Improvement of DeepSort: Advanced Methods for Multi-Object Tracking

DeepSort: A classic and efficient multi-object tracking algorithm that combines deep learning with traditional tracking methods

1. DeepSort Overview and Core Principles

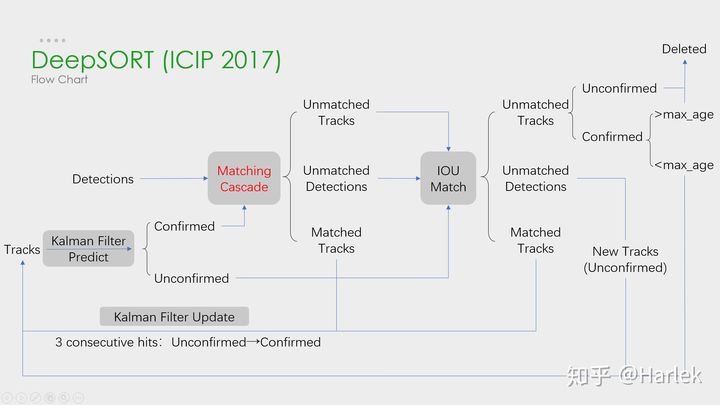

DeepSORT is a multi-object tracking algorithm based on the tracking-by-detection paradigm, representing a significant improvement over the traditional SORT (Simple Online and Realtime Tracking) algorithm. DeepSORT effectively addresses multi-object tracking challenges in complex scenarios by fusing appearance features and motion features, particularly excelling in handling ID switches and occlusions.

1.1 DeepSort Workflow

Multi-object tracking essentially involves associating detection results to form trajectories. The core workflow of DeepSort includes:

- Object Detection: Use external detectors (e.g., YOLO, Faster R-CNN, or SSD) to obtain object bounding boxes in video frames

- Feature Extraction: Extract deep appearance features and motion state information for each detected object

- Data Association: Apply cascade matching strategy and Hungarian algorithm to compute matching degrees between objects in consecutive frames

- State Update: Update Kalman filter states and manage track lifecycles based on matching results

- ID Assignment: Assign unique IDs to each tracked object, maintaining ID consistency throughout the tracking process

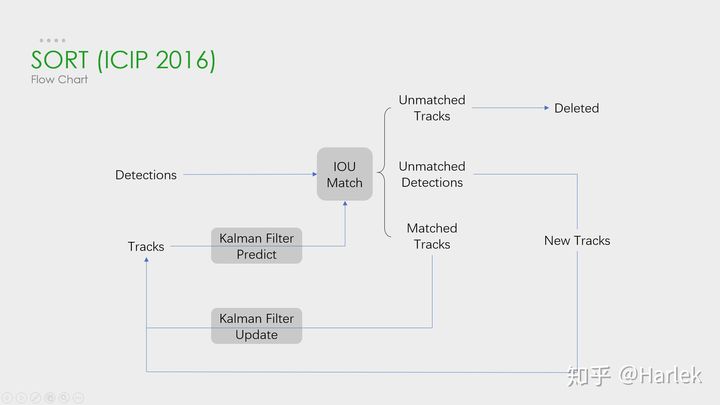

1.2 Comparison Between DeepSort and SORT

The traditional SORT algorithm relies solely on motion information (bounding box position and size) for object association, whereas DeepSort introduces deep learning-generated appearance features, significantly enhancing its ability to handle occlusions and appearance variations.

2. Kalman Filter: The Foundation of Tracking Algorithms

2.1 Intuitive Understanding of Kalman Filter

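The Kalman filter is a recursive estimator that fuses predictions and observations to estimate system states. Consider measuring a person's weight: if the scale is noisy, averaging multiple measurements reduces the error. After $n$ measurements, we obtain results $z_1, z_2, \dots, z_n$, thus:

$$\hat{x}_n = \frac{1}{n}\sum_{i=1}^{n} z_i = \hat{x}_{n-1} + \frac{1}{n}\left(z_n - \hat{x}_{n-1}\right)$$

The new estimate is the previous estimate plus a gain of $1/n$ times the difference between the new measurement and the previous estimate.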

This simple example illustrates the core idea of the Kalman filter: by combining prediction information and measurement information, we can obtain a more accurate estimate than using either information alone.
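The weighing example can be sketched in a few lines of Python. This is only an illustration: the readings are made-up values, and `running_mean` is a hypothetical helper name. It corrects the previous estimate by a gain times the difference between the new measurement and that estimate, which is the same shape as the Kalman update.

```python
def running_mean(measurements):
    """Yield the estimate after each measurement, updated recursively."""
    estimate = 0.0
    for n, z in enumerate(measurements, start=1):
        gain = 1.0 / n                       # weight given to the new measurement
        estimate = estimate + gain * (z - estimate)
        yield estimate


readings = [70.4, 69.8, 70.1, 70.3]          # hypothetical scale readings in kg
estimates = list(running_mean(readings))
print(estimates[-1])                         # equals the plain average of the readings
```

The gain shrinks as more measurements arrive, so later readings move the estimate less. A Kalman filter computes its gain from the prediction and measurement uncertainties instead of from the sample count.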

2.2 Mathematical Model of Kalman Filter

The Kalman filter is based on a linear dynamic system and consists of two key phases: prediction phase and update phase.

2.2.1 State Vector Design

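The Kalman filter in DeepSort uses an 8-dimensional state vector:

$$\mathbf{x} = [u, v, \gamma, h, \dot{u}, \dot{v}, \dot{\gamma}, \dot{h}]^T$$

Where:

- $(u, v)$ represents the bounding box center position

- $\gamma$ represents the bounding box aspect ratio

- $h$ represents the bounding box height (SORT's 7-dimensional state uses the box area instead)

- $\dot{u}, \dot{v}, \dot{\gamma}, \dot{h}$ represent the corresponding velocity components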

2.2.2 Prediction-Update Cycle

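Prediction Phase:

$$\hat{\mathbf{x}}_{k|k-1} = F\,\hat{\mathbf{x}}_{k-1|k-1}$$

$$P_{k|k-1} = F\,P_{k-1|k-1}\,F^T + Q$$

Where:

- $F$ is the state transition matrix

- $P$ is the state covariance matrix

- $Q$ is the process noise covariance matrix

Update Phase:

$$\mathbf{y}_k = \mathbf{z}_k - H\,\hat{\mathbf{x}}_{k|k-1}$$

$$K_k = P_{k|k-1} H^T \left(H P_{k|k-1} H^T + R\right)^{-1}$$

$$\hat{\mathbf{x}}_{k|k} = \hat{\mathbf{x}}_{k|k-1} + K_k\,\mathbf{y}_k$$

$$P_{k|k} = (I - K_k H)\,P_{k|k-1}$$

Where:

- $\mathbf{z}_k$ is the observation value (detection result)

- $H$ is the observation matrix

- $R$ is the observation noise covariance matrix

- $K_k$ is the Kalman gain

- $\mathbf{y}_k$ is the residual (innovation)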
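The prediction-update cycle can be sketched with NumPy as follows. This is a minimal illustration rather than DeepSort's actual filter. It assumes a constant-velocity model with a time step of one frame and a measurement that observes the first four state entries directly. The noise magnitudes and box values are made up.

```python
import numpy as np

DIM_X, DIM_Z = 8, 4                        # state size and measurement size

F = np.eye(DIM_X)                          # state transition matrix
F[:DIM_Z, DIM_Z:] = np.eye(DIM_Z)          # each box parameter += its velocity
H = np.eye(DIM_Z, DIM_X)                   # observation matrix: first four entries


def predict(x, P, Q):
    """Propagate the state and its covariance one frame ahead."""
    return F @ x, F @ P @ F.T + Q


def update(x, P, z, R):
    """Correct the predicted state with a detection z."""
    y = z - H @ x                          # residual (innovation)
    S = H @ P @ H.T + R                    # innovation covariance
    K = P @ H.T @ np.linalg.inv(S)         # Kalman gain
    return x + K @ y, (np.eye(DIM_X) - K @ H) @ P


# Hypothetical track: four box parameters followed by four zero velocities.
x = np.array([100.0, 50.0, 0.5, 80.0, 0.0, 0.0, 0.0, 0.0])
P = np.eye(DIM_X) * 10.0
Q = np.eye(DIM_X) * 0.01                   # assumed process noise
R = np.eye(DIM_Z) * 1.0                    # assumed observation noise

x_pred, P_pred = predict(x, P, Q)
z = np.array([104.0, 52.0, 0.5, 82.0])     # hypothetical detection
x_new, P_new = update(x_pred, P_pred, z, R)
```

After the update, the position estimate moves most of the way toward the detection, and the velocity entries become positive even though velocity is never measured directly. The covariance couples position and velocity, which is what lets the filter infer motion.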

3. Deep Appearance Descriptor: Key Innovation of DeepSort

3.1 Appearance Feature Extraction Framework

A key innovation of DeepSort is the introduction of Deep Appearance Descriptors, extracted by a pre-trained convolutional neural network (CNN). This network maps each object bounding box to a 128-dimensional feature vector and projects it onto a unit hypersphere through normalization.

By storing historical appearance features for each track, DeepSort enables reliable re-identification when objects reappear after occlusion.

3.2 CNN Architecture Design

The appearance feature extraction network adopts a ResNet-like architecture, including multiple convolutional layers, residual blocks, and pooling layers:

Input -> Conv -> Res Block -> MaxPool -> ... -> Dense(128) -> BatchNorm -> L2Norm -> Output

This design strikes a balance between computational efficiency and feature discriminative power, generating feature representations that can distinguish similar pedestrians while maintaining low computational cost.

3.3 Feature Vector Metrics and Matching

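For any two feature vectors $\mathbf{r}_i$ and $\mathbf{r}_j$, cosine distance is used as a similarity measure:

$$d(\mathbf{r}_i, \mathbf{r}_j) = 1 - \frac{\mathbf{r}_i^T \mathbf{r}_j}{\lVert \mathbf{r}_i \rVert\,\lVert \mathbf{r}_j \rVert}$$

Since feature vectors are normalized to unit length, the denominator equals 1 and the cosine distance simplifies to $1 - \mathbf{r}_i^T \mathbf{r}_j$, a single inner product, which improves matching efficiency.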
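A minimal pure-Python sketch of this simplification is shown below. The helper names `l2_normalize` and `cosine_distance` are hypothetical, and the 3-dimensional vectors stand in for real 128-dimensional descriptors.

```python
import math


def l2_normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cosine_distance(a, b):
    """Cosine distance for vectors that are already unit length."""
    return 1.0 - sum(x * y for x, y in zip(a, b))


a = l2_normalize([1.0, 2.0, 2.0])
b = l2_normalize([2.0, 4.0, 4.0])      # same direction as a
c = l2_normalize([-1.0, -2.0, -2.0])   # opposite direction

print(cosine_distance(a, b), cosine_distance(a, c))
```

Vectors pointing the same way give a distance near 0, and opposite vectors give a distance near 2. That range of [0, 2] is what matching thresholds are defined on.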

4. Core Improvements in DeepSort

4.1 Fusion of Motion and Appearance Features

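DeepSort combines motion information and appearance information to calculate object matching costs. The integrated matching metric is defined as:

$$c_{i,j} = \lambda\, d^{(1)}(i,j) + (1 - \lambda)\, d^{(2)}(i,j)$$

Where $d^{(1)}(i,j)$ is the motion metric based on Mahalanobis distance between track $i$ and detection $j$, $d^{(2)}(i,j)$ is the appearance metric based on cosine distance, and $\lambda \in [0, 1]$ balances the two terms.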

4.2 Motion Gating via Mahalanobis Distance

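DeepSort uses Mahalanobis Distance to gate unlikely associations:

$$d^{(1)}(i,j) = (\mathbf{d}_j - \hat{\mathbf{z}}_i)^T S_i^{-1} (\mathbf{d}_j - \hat{\mathbf{z}}_i)$$

Where $\mathbf{d}_j$ is the $j$-th detection, $\hat{\mathbf{z}}_i$ is the Kalman filter prediction for track $i$ in measurement space, and $S_i$ is the corresponding covariance matrix.

If the squared Mahalanobis distance exceeds a preset threshold (typically the 0.95 quantile of the chi-square distribution, which is 9.4877 for the four-dimensional measurement space), the association is excluded from consideration, reducing computational overhead and improving matching accuracy.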
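The gating step and the weighted cost can be sketched together with NumPy. The sketch makes several assumptions: a 4-dimensional measurement, a hypothetical `motion_weight` of 0.3 (so the appearance term carries the remaining 0.7), and made-up track and detection values. The function names are illustrative and do not come from any particular library.

```python
import math

import numpy as np

CHI2_95_4DOF = 9.4877   # 0.95 quantile of chi-square with 4 degrees of freedom


def squared_mahalanobis(detection, predicted_mean, predicted_cov):
    """Squared Mahalanobis distance between a detection and a track prediction."""
    diff = np.asarray(detection, dtype=float) - np.asarray(predicted_mean, dtype=float)
    return float(diff @ np.linalg.solve(predicted_cov, diff))


def association_cost(detection, predicted_mean, predicted_cov,
                     appearance_distance, motion_weight=0.3, gate=CHI2_95_4DOF):
    """Weighted motion/appearance cost; gated-out pairs cost infinity."""
    d_motion = squared_mahalanobis(detection, predicted_mean, predicted_cov)
    if d_motion > gate:
        return math.inf                 # implausible motion: never associate
    return motion_weight * d_motion + (1.0 - motion_weight) * appearance_distance


mean = np.array([100.0, 50.0, 0.5, 80.0])          # hypothetical predicted box
cov = np.diag([4.0, 4.0, 0.01, 4.0])               # hypothetical covariance
near = association_cost([101.0, 51.0, 0.5, 81.0], mean, cov, appearance_distance=0.2)
far = association_cost([120.0, 50.0, 0.5, 80.0], mean, cov, appearance_distance=0.2)
```

Here `near` is a finite cost, while `far` is infinite because the detection sits ten standard deviations from the predicted center. Using `np.linalg.solve` avoids forming the explicit inverse of the covariance matrix.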

4.3 Cascade Matching Strategy

DeepSort introduces a Cascade Matching strategy that prioritizes the most recently updated tracks:

- Match tracks in ascending order of the number of frames since their last update (from the most recently matched tracks up to those at the maximum allowed age)

- Solve a linear assignment problem at each level, removing matched detections from the candidate pool

- Continue processing the next level of tracks until all tracks are considered

This cascade strategy accounts for the increasing prediction uncertainty of the Kalman filter over time, effectively reducing ID switches.
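The steps above can be sketched in pure Python. To keep the example dependency-free, the per-level assignment uses a brute-force search as a stand-in for the Hungarian algorithm, which is only practical for a handful of tracks. The names `min_cost_assignment` and `matching_cascade`, the cost values, and the `max_cost` threshold are all hypothetical.

```python
from itertools import permutations


def min_cost_assignment(cost, max_cost):
    """Brute-force stand-in for the Hungarian algorithm (tiny inputs only).

    Returns (row, col) pairs; pairs costing more than max_cost stay unmatched.
    """
    n_rows = len(cost)
    n_cols = len(cost[0]) if n_rows else 0
    if n_rows == 0 or n_cols == 0:
        return []
    # None is a dummy slot: a row may stay unassigned at a price of max_cost.
    slots = list(range(n_cols)) + [None] * n_rows
    best_pairs, best_total = [], float("inf")
    for perm in permutations(slots, n_rows):
        pairs = [(r, c) for r, c in enumerate(perm)
                 if c is not None and cost[r][c] <= max_cost]
        total = sum(cost[r][c] for r, c in pairs) + max_cost * (n_rows - len(pairs))
        if total < best_total:
            best_pairs, best_total = pairs, total
    return best_pairs


def matching_cascade(track_ages, n_detections, cost_fn, max_age, max_cost):
    """track_ages[t] is the number of frames since track t was last matched."""
    unmatched = list(range(n_detections))
    matches = []
    for age in range(1, max_age + 1):          # most recently seen tracks first
        if not unmatched:
            break
        level = [t for t, a in enumerate(track_ages) if a == age]
        if not level:
            continue
        cost = [[cost_fn(t, d) for d in unmatched] for t in level]
        found = [(level[r], unmatched[c])
                 for r, c in min_cost_assignment(cost, max_cost)]
        matches.extend(found)
        taken = {d for _, d in found}
        unmatched = [d for d in unmatched if d not in taken]
    return matches, unmatched


costs = {(0, 0): 0.1, (0, 1): 0.8, (1, 0): 0.05, (1, 1): 0.2}   # hypothetical
matches, unmatched = matching_cascade(
    track_ages=[1, 3], n_detections=2,
    cost_fn=lambda t, d: costs[(t, d)], max_age=30, max_cost=0.7)
```

In this example, track 1 (unseen for three frames) has the lowest cost to detection 0. But track 0 was matched in the previous frame, so it is processed first and claims that detection. Track 1 is then left with detection 1.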

4.4 IOU Matching as Supplementary Strategy

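For detections and tracks that remain unmatched after cascade matching, DeepSort employs IOU Matching as a supplementary strategy:

$$\mathrm{IoU}(A, B) = \frac{|A \cap B|}{|A \cup B|}$$

where $A$ is the track's predicted bounding box and $B$ is the detection bounding box.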

This step primarily handles newly created tracks (age = 1) and objects with significant appearance feature changes.
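For reference, the overlap ratio used in this step can be computed as below. The sketch assumes boxes given as `(x1, y1, x2, y2)` corner coordinates, and `iou` is a hypothetical helper name.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)   # zero when boxes are disjoint
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


overlap = iou((0, 0, 10, 10), (5, 0, 15, 10))   # half-overlapping boxes
```

An assignment cost is typically taken as `1 - iou(...)`, so that larger overlaps give lower costs.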

5. Track Lifecycle Management

DeepSort improves SORT's track management strategy by introducing a more sophisticated track lifecycle:

5.1 Track State Definition

- Tentative Track: Newly created tracks are in a tentative state, requiring consecutive matches for several frames to be confirmed

- Confirmed Track: Tracks that have been stably tracked and are considered reliable targets

- Deleted Track: Tracks that haven't been matched for an extended period will be deleted

5.2 Track Creation and Deletion Policies

- Track Creation: For each unmatched detection, create a new tentative track

- Track Confirmation: Tentative tracks are confirmed after a fixed number of consecutive matches (default is 3)

- Track Deletion: Tracks are deleted if they are not matched within a maximum number of frames, the maximum age (default is 30)

This layered track management strategy effectively reduces false positive tracks and ID switches caused by short-term occlusions.
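The lifecycle can be sketched as a small state machine. This sketch makes two assumptions that the text does not spell out: the detection that creates a track counts as its first hit, and a tentative track is deleted on its first miss. The names `Track`, `n_init`, and `max_age` are illustrative.

```python
from enum import Enum


class TrackState(Enum):
    TENTATIVE = 1
    CONFIRMED = 2
    DELETED = 3


class Track:
    def __init__(self, track_id, n_init=3, max_age=30):
        self.track_id = track_id
        self.n_init = n_init              # hits needed for confirmation
        self.max_age = max_age            # misses tolerated once confirmed
        self.hits = 1                     # assumption: creation counts as a hit
        self.time_since_update = 0
        self.state = TrackState.TENTATIVE

    def mark_matched(self):
        """Call when a detection was associated with this track."""
        self.hits += 1
        self.time_since_update = 0
        if self.state is TrackState.TENTATIVE and self.hits >= self.n_init:
            self.state = TrackState.CONFIRMED

    def mark_missed(self):
        """Call when no detection was associated in the current frame."""
        self.time_since_update += 1
        if self.state is TrackState.TENTATIVE:
            self.state = TrackState.DELETED       # assumption: no second chance
        elif self.time_since_update > self.max_age:
            self.state = TrackState.DELETED


track = Track(track_id=1)
track.mark_matched()
track.mark_matched()                      # third hit: track becomes confirmed
```

Deleting tentative tracks on the first miss keeps spurious detections from lingering. Confirmed tracks survive up to `max_age` missed frames, so they can recover from short occlusions.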

6. Advanced Implementation Techniques

6.1 Feature Caching and Updating

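To enhance tracking stability, DeepSort maintains a feature cache (gallery) for each track, storing the 100 most recently associated appearance features. When calculating appearance similarity, the cosine distance between the current detection's feature and every cached feature of a track is computed, and the smallest distance (the nearest neighbor in the gallery) is used as the final appearance distance. This lets a detection match a track as long as it resembles any recent view of the target.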
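A bounded cache like this maps naturally onto `collections.deque`. The sketch below assumes unit-length feature vectors and uses a hypothetical `FeatureGallery` class. The reducer is left as a parameter: the DeepSORT paper takes the minimum distance over the gallery, while passing a mean gives an averaged score instead.

```python
from collections import deque
from statistics import mean


class FeatureGallery:
    """Keeps the most recent appearance features of one track."""

    def __init__(self, budget=100):
        self.features = deque(maxlen=budget)   # oldest entries drop automatically

    def add(self, feature):
        self.features.append(feature)

    def distance(self, feature, reducer=min):
        """Reduce the cosine distances to all cached (unit-length) features."""
        if not self.features:
            raise ValueError("gallery is empty")
        return reducer(
            1.0 - sum(a * b for a, b in zip(feature, cached))
            for cached in self.features
        )


gallery = FeatureGallery(budget=2)             # tiny budget for illustration
for feat in [(1.0, 0.0), (0.0, 1.0), (0.6, 0.8)]:
    gallery.add(feat)                          # the first feature is evicted

nearest = gallery.distance((1.0, 0.0))                  # minimum over the gallery
averaged = gallery.distance((1.0, 0.0), reducer=mean)   # mean over the gallery
```

With a budget of 2, only the last two features remain, so the query no longer finds its exact match from the evicted first entry.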

6.2 Optimization of Feature Matching

In practical implementation, feature matching can be optimized through the following techniques:

- Batch Feature Extraction: Feed all detection targets into the CNN at once, reducing GPU-CPU data transfer overhead

- Sparse Distance Calculation: Use Mahalanobis distance gating to reduce the number of appearance similarities that need to be calculated

- GPU Acceleration: Perform cosine distance calculations on the GPU, leveraging matrix operations for acceleration

6.3 Key Parameter Tuning

DeepSort's performance is influenced by multiple parameters, with key parameters including:

| Parameter | Recommended Value |
|---|---|
| Appearance feature weight | 0.7 |
| Frames needed for track confirmation | 3 |
| Maximum frames for track deletion | 30 |
| IOU matching threshold | 0.5 |
| Mahalanobis distance gating threshold | 9.4877 (0.95 quantile of the chi-square distribution with 4 degrees of freedom) |

7. Recent Improvements and Future Trends

7.1 Transformer-based Feature Extractors

Recent research indicates that Transformer-based feature extractors can capture richer contextual information:

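Implementation example of ViT (Vision Transformer) model:

```python
# Transformer Feature Extractor
import timm
import torch.nn as nn


class ViTFeature(nn.Module):
    def __init__(self):
        super().__init__()
        # Pretrained ViT-Tiny backbone from timm
        self.vit = timm.create_model('vit_tiny_patch16_224',
                                     pretrained=True)

    def forward(self, x):
        # Assumes a timm version where forward_features returns all tokens;
        # index 0 is the class token, used here as the appearance descriptor
        return self.vit.forward_features(x)[:, 0]
```

7.2 3D Motion Modeling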

More accurate 3D motion models can be constructed by introducing depth information:

$$Z = \frac{f \cdot H}{h}$$

Where $Z$ is depth estimation, $f$ is focal length, $H$ is the actual height of the target, and $h$ is the pixel height.
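As a quick worked example of this pinhole relation, the sketch below assumes the focal length is expressed in pixels and the real height in meters, so the depth comes out in meters. The function name and the numbers are hypothetical.

```python
def depth_from_height(focal_length_px, real_height_m, pixel_height):
    """Estimate depth with the pinhole model: focal length * real height / pixel height."""
    if pixel_height <= 0:
        raise ValueError("pixel_height must be positive")
    return focal_length_px * real_height_m / pixel_height


# A 1.7 m tall pedestrian that appears 170 px tall with a 1000 px focal length
depth = depth_from_height(1000.0, 1.7, 170.0)
print(round(depth, 2))
```

The estimate is only as good as the assumed real height, so in practice it works best for object classes with a narrow height range.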

7.3 End-to-End Tracking Frameworks

The latest research trend is to integrate detection and tracking in an end-to-end framework. Models like TrackFormer and MOTR implement joint optimization of detection and tracking through Transformer architectures.

7.4 Few-Shot Learning and Online Adaptation

To adapt to complex environments, some new methods introduce few-shot learning and online adaptation techniques, enabling trackers to learn and adjust model parameters during runtime.

8. Experimental Evaluation and Application Scenarios

8.1 Performance Metrics

| Metric | SORT | DeepSort | Change |
|---|---|---|---|
| MOTA (%) | 62.1 | 73.2 | +11.1 points |
| ID Switches | 1,423 | 781 | -45.1% |
| FP per Frame | 19.6 | 12.3 | -37.2% |
| Processing Speed | 260 Hz | 45 Hz | -82.7% |

8.2 Typical Application Scenarios

DeepSort excels in the following scenarios:

- Pedestrian Counting and Flow Analysis: People counting in shopping malls and traffic intersections

- Security Surveillance: Anomalous behavior detection and tracking

- Sports Event Analysis: Athlete trajectory tracking and data analysis

- Smart Cities: Monitoring human activities in public spaces

- Autonomous Driving: Tracking pedestrians and vehicles in the surroundings

8.3 Practical Deployment Considerations

In practical deployment, the following factors need to be considered:

- Computational Resources: DeepSort has higher computational costs than SORT, so hardware requirements should be assessed before deployment

- Network Optimization: Consider techniques like quantization and pruning to reduce the computational load of the feature extraction network

- Scene Adaptation: Different scenarios may require retraining the feature extraction network or adjusting parameters

- Privacy Protection: In certain applications, implement anonymization processing or edge computing

9. Conclusion and Outlook

DeepSort achieves stable tracking of multiple objects in complex scenarios by combining deep appearance features, motion state information, and cascade matching strategy. Compared to traditional SORT, DeepSort shows significant improvements in handling ID switches and occlusions, albeit with increased computational cost.

Future research directions include:

- Reducing the computational cost of feature extraction to improve real-time performance

- Improving handling capabilities for crowded scenes and long-term occlusions

- Integrating multi-modal information (such as depth, thermal imaging) to enhance tracking stability

- Developing more efficient global association methods to overcome limitations of local matching

With these improvements, multi-object tracking technology will play a more important role in intelligent security, autonomous driving, human-computer interaction, and other fields.

References

- Wojke, N., Bewley, A., & Paulus, D. (2017). Simple online and realtime tracking with a deep association metric. In 2017 IEEE International Conference on Image Processing (ICIP).

- Bewley, A., Ge, Z., Ott, L., Ramos, F., & Upcroft, B. (2016). Simple online and realtime tracking. In 2016 IEEE International Conference on Image Processing (ICIP).

- Welch, G., & Bishop, G. (2006). An introduction to the Kalman filter. University of North Carolina at Chapel Hill.

- Huang, Y., Sun, T., Yang, T., & Wei, Z. (2023). Transformer-based Deep Feature Learning for Robust Multiple Object Tracking. In CVPR 2023.